Sparse Continual Learning on the Edge

NeurIPS 2022 paper.

TLDR

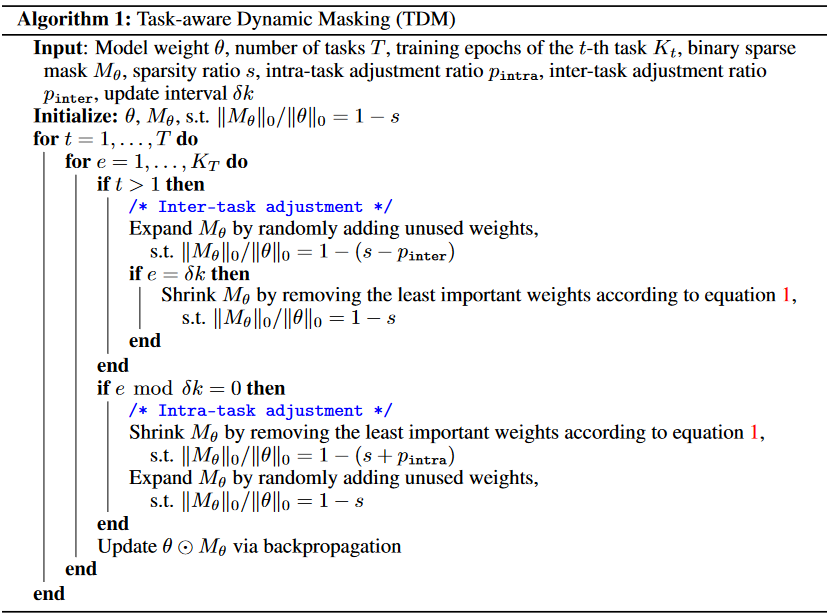

- Task-aware dynamic mask TDM :

Based on $CWI$, keep only important weights for both current & past tasks.

++ consider task transition - Dynamic Gradient Masking DGM :

Based on $CGI$, select important weights to update,

only a subset of sparse weight updated to leverage gradient sparcity.

Quick Look

- Authors & Affiliation: Zifeng Wang

- Link: https://arxiv.org/pdf/2209.09476.pdf

- Comments: NeurIPS 2022

- Relevance: 4.5

Research Topic

- Category (General): Continual Learning

- Category (Specific): Continual Learning, Lottery Ticket Theorem

Paper Summary (What)

- Use two types of masks, $M_w$ and $M_g$ based on slightly different importance metrics.

- Dynamic Data Removal DDR : remove ‘easy’ examples regarding to loss,

keep more informative examples in the replay buffer. - $CWI$ for $M_w$

\(CWI = \text{L1 norm of }W + \text{gradient w.r.t task data } D_t + \text{gradient w.r.t rehearsal buffer} R\) - $CGI$ for $M_g$

\(CGI = \text{grad w.r.t task data }D_t + \text{gradient w.r.t rehearsal buffer} R\) - Update mask by retaining weights with high $CWI$ or $CGI$ value.

Notable References

Thoughts

Isn’t $CWI$ and $CGI$ are redundant metrics, i.e. not orthogonal enough?

Applying different masks for forward/backward phase is notable.

Somewhat very similar with my idea, but somewhat different from what I thought.

Footnote

아래와 같은 양식을 활용한다.

#

## Quick Look

- **Authors & Affiliation**: [Authors][Affiliations]

- **Link**: [Paper link]

- **Comments**: [e.g. Published at X / arXiv paper / in review.]

- **TLDR**: [One or at most two line summary]

- **Relevance**: [Score between 1 and 5, stating how relevant this paper is to your work. Usually filled in at the end.]

# Research Topic

- **Category** (General):

- **Category** (Specific):

# Paper Summary (What)

[Summary of the paper - a few sentences with bullet points. What did they do?]